All about robots.txt

In the root directory

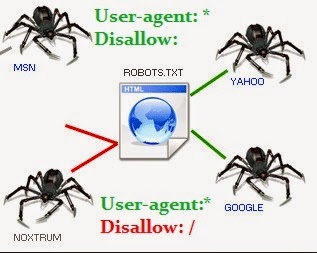

Website owners use the robots.txt file to give web robots instructions about their site; this is called the Robots Exclusion Protocol.

How does it work?

Before a web robot visits a website URL such as http://www.seoataffordable.blogspot.in/, it first checks the site's robots.txt file (http://seoataffordable.blogspot.in/robots.txt). Suppose it finds:

    User-agent: *
    Disallow: /

"User-agent: *" means the record applies to all robots, and "Disallow: /" tells those robots not to visit any page on the website.

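To see what a robot does with this record, here is a minimal sketch using Python's standard-library urllib.robotparser module, one parser that follows these rules. The host example.com and the robot name AnyBot are placeholders, not part of the original example.

```python
from urllib.robotparser import RobotFileParser

# The record from the example above: applies to every robot, blocks every path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
# parse() takes the file's lines directly, so no network access is needed.
parser.parse(ROBOTS_TXT.splitlines())

# "AnyBot" is a hypothetical robot name; the "*" record applies to it.
print(parser.can_fetch("AnyBot", "http://example.com/"))           # False
print(parser.can_fetch("AnyBot", "http://example.com/page.html"))  # False
```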
Where is it located?

In the top-level directory (root directory) of your web server.

The file name is case-sensitive: "robots.txt" and "Robots.TxT" are different names, so always use lowercase, as in "/robots.txt".
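A robot does not search a site for this file; it builds the address from the URL it is about to fetch, keeping the scheme and host and replacing the path with /robots.txt. A small Python sketch of that step (robots_txt_url is a hypothetical helper name, and example.com is a placeholder):

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL a robot would check before fetching page_url.

    The file always sits at the root of the host, under the exact
    lowercase name "/robots.txt"; the page's own path and query are dropped.
    """
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port), replace everything else.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post.html?id=7"))
# https://www.example.com/robots.txt
```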

The "/robots.txt" file is a plain text file containing one or more records. It usually contains a single record like this:

    User-agent: *
    Disallow: /cgi-bin/
    Disallow: /tmp/
    Disallow: /~joe/

In this example, three directories are excluded.
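A quick way to check which URLs a record like this blocks is to feed it to a parser. This sketch again assumes Python's urllib.robotparser; the sample paths under example.com are made up for illustration. Each Disallow value acts as a prefix of the URL path, and anything that matches no rule stays allowed.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /~joe/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/cgi-bin/search", "/tmp/cache.html", "/~joe/index.html", "/index.html"]:
    # A URL is blocked when its path starts with one of the Disallow values.
    allowed = parser.can_fetch("AnyBot", "http://example.com" + path)
    print(path, "allowed" if allowed else "blocked")

# /cgi-bin/search blocked
# /tmp/cache.html blocked
# /~joe/index.html blocked
# /index.html allowed
```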

Note that you need a separate "Disallow" line for every URL prefix you want to exclude; you cannot write "Disallow: /cgi-bin/ /tmp/" on a single line. Also, a record may not contain blank lines, because blank lines are used to separate multiple records.
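The sketch below shows how one parser, Python's urllib.robotparser, treats both mistakes; other robots may handle them differently, which is exactly why the file should avoid them. The URL and the robot name AnyBot are placeholders.

```python
from urllib.robotparser import RobotFileParser


def parsed(text: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


url = "http://example.com/tmp/file.html"

# Wrong: both prefixes on one line are read as a single path "/cgi-bin/ /tmp/".
one_line = parsed("User-agent: *\nDisallow: /cgi-bin/ /tmp/\n")
# Right: one Disallow line per prefix.
two_lines = parsed("User-agent: *\nDisallow: /cgi-bin/\nDisallow: /tmp/\n")
# Wrong: the blank line ends the record, leaving Disallow with no User-agent.
split_record = parsed("User-agent: *\n\nDisallow: /tmp/\n")

print(one_line.can_fetch("AnyBot", url))      # True  (not blocked)
print(two_lines.can_fetch("AnyBot", url))     # False (blocked)
print(split_record.can_fetch("AnyBot", url))  # True  (not blocked)
```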

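Note also that the original standard supports neither globbing nor regular expressions in the User-agent or Disallow lines. The "*" in the User-agent field is a special value meaning "any robot". Specifically, you cannot have lines like "User-agent: *bot*", "Disallow: /temp/*" or "Disallow: *.jpg". Some crawlers, including Googlebot, do interpret "*" and "$" in Disallow paths, and RFC 9309 (2022) standardizes those two characters, but a robot that follows only the original standard reads them literally.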

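Here is how a parser that reads paths literally, in this sketch Python's urllib.robotparser, handles two of the wildcard lines above. Neither rule matches the placeholder URLs, so both pages stay fetchable even though the author probably meant to block them.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /temp/*
Disallow: *.jpg
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# urllib.robotparser treats "*" inside a path as an ordinary character,
# so "/temp/*" and "*.jpg" are not prefixes of these paths.
print(parser.can_fetch("AnyBot", "http://example.com/temp/a.html"))  # True
print(parser.can_fetch("AnyBot", "http://example.com/photo.jpg"))    # True
```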
What you exclude depends on your server. Everything that is not explicitly disallowed is considered fair game to retrieve. Here are some examples:

To exclude all robots from the entire server

    User-agent: *
    Disallow: /

To allow all robots complete access

    User-agent: *
    Disallow:
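The only difference between this record and the previous one is the "/" after Disallow. A minimal check, assuming Python's urllib.robotparser and a placeholder URL; allowed is a hypothetical wrapper written for this sketch.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str) -> bool:
    """Parse robots_txt and ask whether a generic robot may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("AnyBot", url)


url = "http://example.com/any/page.html"
# An empty Disallow value blocks nothing, so everything is allowed.
print(allowed("User-agent: *\nDisallow:\n", url))    # True
# A single "/" is a prefix of every path, so everything is blocked.
print(allowed("User-agent: *\nDisallow: /\n", url))  # False
```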

To exclude all robots from part of the server

    User-agent: *
    Disallow: /tmp-bin/
    Disallow: /temp/
    Disallow: /spam/

To exclude a single robot

    User-agent: BadBot
    Disallow: /
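A record only binds the robots it names. In this sketch (Python's urllib.robotparser again; FriendlyBot and the URL are placeholders), BadBot is shut out while a robot with no matching record may fetch everything.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "http://example.com/index.html"
# The record names BadBot, so its Disallow: / applies.
print(parser.can_fetch("BadBot", url))       # False
# No record names FriendlyBot and there is no "*" record, so access is allowed.
print(parser.can_fetch("FriendlyBot", url))  # True
```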

To allow a single robot (here Google's crawler, whose user-agent token is Googlebot)

    User-agent: Googlebot
    Disallow:

    User-agent: *
    Disallow: /
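With two records, a robot uses the one that names it and falls back to the "*" record only if none does. This sketch assumes Python's urllib.robotparser and writes Google's crawler under its user-agent token, Googlebot; BadBot and the URL are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Two records separated by a blank line.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "http://example.com/index.html"
print(parser.can_fetch("Googlebot", url))  # True: its own record allows everything
print(parser.can_fetch("BadBot", url))     # False: falls back to the "*" record
```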

To exclude all files except one

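The original standard has no "Allow" field (Googlebot and other major crawlers now accept one, and RFC 9309 standardizes it, but older robots may ignore it). The easy way is to put all files to be disallowed into a separate directory, say "xyz", and leave the one file in the level above this directory: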

    User-agent: *
    Disallow: /~abc/xyz/
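A check of the directory approach, assuming Python's urllib.robotparser; private.html and public.html are hypothetical file names standing in for the disallowed files and the one file left outside xyz.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /~abc/xyz/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Inside the disallowed directory: blocked.
print(parser.can_fetch("AnyBot", "http://example.com/~abc/xyz/private.html"))  # False
# One level above it: not covered by the prefix, so allowed.
print(parser.can_fetch("AnyBot", "http://example.com/~abc/public.html"))       # True
```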

Alternatively, you can explicitly disallow each page you want to exclude:

    User-agent: *
    Disallow: /~abc/spam.html
    Disallow: /~abc/fo.html
    Disallow: /~abc/ar.html
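Because every excluded page needs its own Disallow line, a long list like this is easy to generate. A minimal Python sketch with a hypothetical helper, build_robots_txt, which writes one record and then confirms the result with urllib.robotparser; index.html is a placeholder for a page that should stay allowed.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths: list[str], user_agent: str = "*") -> str:
    """Build a single robots.txt record with one Disallow line per path."""
    lines = [f"User-agent: {user_agent}"]
    # One prefix per line: the format has no syntax for several paths on one line.
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    # No blank lines inside the record; end the file with a newline.
    return "\n".join(lines) + "\n"


text = build_robots_txt(["/~abc/spam.html", "/~abc/fo.html", "/~abc/ar.html"])
print(text, end="")
# User-agent: *
# Disallow: /~abc/spam.html
# Disallow: /~abc/fo.html
# Disallow: /~abc/ar.html

# Check the generated file with the standard-library parser.
parser = RobotFileParser()
parser.parse(text.splitlines())
print(parser.can_fetch("AnyBot", "http://example.com/~abc/fo.html"))     # False
print(parser.can_fetch("AnyBot", "http://example.com/~abc/index.html"))  # True
```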